[ Overview || TCP | C++ | Python | REST | WebSocket || Models | Customization | Deployment | Licensing ]

The Mod9 ASR Engine consists of a Linux server, along with compatible models and support software, that enables clients to send commands and audio over a network and receive the written transcript of what was said in the audio.

This document is for the benefit of a client who communicates with the server using a relatively low-level protocol over a standard TCP network socket. For a higher-level client interfaces, refer to the Python SDK or REST API.

The accompanying deployment guide describes how an operator may install and manage the Engine.

- Protocol overview

- Server commands

- Sending audio data

- Request options

- Response fields

- Example usage

- Release notes

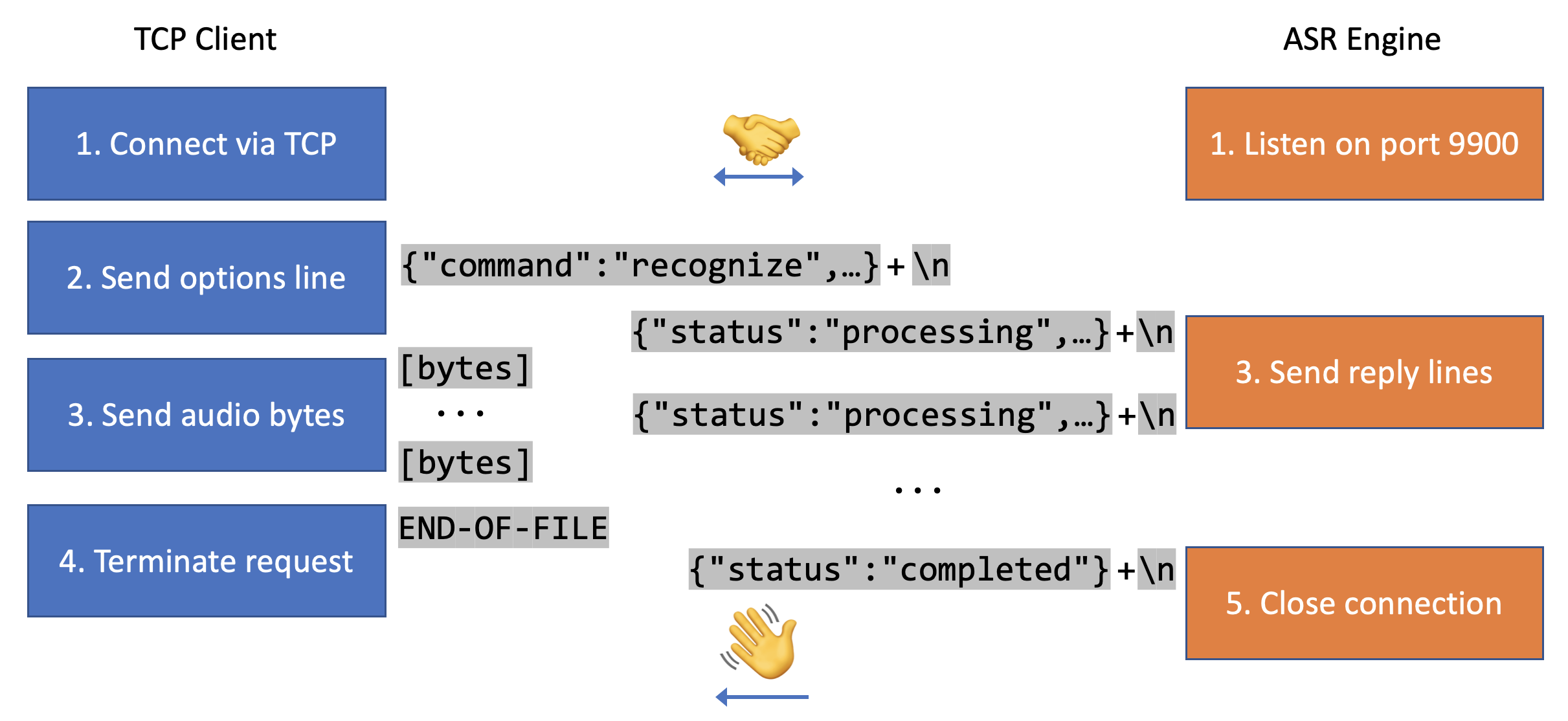

The custom application protocol used for communicating with the server is as follows:

-

The client establishes a TCP connection with the server, creating a socket for duplex communication.

-

The client sends a single-line JSON-formatted object indicating request options, terminated by a newline. This message's fields, and the acceptable values for various commands are described in the next sections. The server will wait 60 seconds for this initial JSON request message before timing out and closing the connection.

-

Next, two processes may happen simultaneously:

-

The server will send one or more newline-terminated messages to the client, each a single-line JSON-formatted object. Each server response message includes a

statusfield with values as described in the table below. -

Depending on the specified request options, the client may send audio data to the server as described in a section below: sending audio data. The server will processes this audio as it is received.

-

-

If any audio data was to be included in the request, the client must properly indicate its termination. Otherwise, the server will time out after 10 seconds elapse without receiving data as expected.

-

The server will respond with a final message that has a status of either

"completed"or"failed". The TCP connection will be closed by the server.

The server's response messages will always include a status field, with values interpreted as follows:

| Status | Description |

|---|---|

"completed" |

The request is done processing and the server will close the connection upon this final message. |

"failed" |

There was a problem, e.g. couldn't parse request options; the response message may describe details in the error field. The server will immediately close the connection upon this final message, even if the client is still sending audio data. |

"processing" |

All non-final messages will have this status. As it is processing, the server may send multiple messages with this status to indicate intermediate results and the server may be able to simultaneously receive audio data from the client. |

Additional fields may be included in the response messages.

For example, if the Engine encounters a non-fatal problem while processing, it may include a warning field in a non-final message that describes the problem when it occurs.

Since this protocol is intended for asynchronous duplex communication, care should be taken to implement clients that simultaneously read response messages while sending audio data (steps 3.i. and 3.ii. above).

Unlike a synchronous protocol such as HTTP, the client should not send the entire request (including potentially many bytes of audio data) before reading any of the response; this would only work for sending relatively small requests as otherwise TCP buffers may saturate.

We advise using a streaming TCP client such as nc, or client library such as our Python SDK.

The protocol requires the initial message sent by the client to be a single-line JSON object terminated by a newline. This will be parsed as request options that should specify a command directing the server to perform a particular task.

The request could set the command option to one of the string values described below; the default is "recognize".

| Command | Description |

|---|---|

"recognize" |

Start a speech recognition request in which audio data will be sent to the server. While receiving this audio, the server may simultaneously send results in a series of messages. This is the default command, and need not be specified. More details are provided below. |

"get-info" |

Report current resource utilization and server state. (Example) |

"get-models-info" |

Report information about currently loaded models. (Example) |

"get-version" |

Report the Engine's version string and build metadata. |

"lookup-word" |

Query an ASR model for information about a word. (Documentation) |

"pronounce-words" |

Automatically generate pronunciations of words. (Documentation) |

Several more commands are not allowed by default, since they might interfere with other clients' requests. The server must be specially configured by the operator to enable these commands:

| Command | Description |

|---|---|

"add-grammar" |

Embed a task-specific grammar to be recognized alongside a loaded ASR model. (Documentation) |

"add-words" |

Add words to a loaded ASR model. (Documentation) |

"bias-words" |

Change the weights associated with specific words in a loaded ASR model. (Documentation) |

"drop-grammar" |

Drop grammars added with "add-grammar". (Documentation) |

"drop-words" |

Drop words added with "add-words" (Documentation) |

"load-model" |

Load an ASR, G2P, or NLP model from the Engine's filesystem. (Example) |

"shutdown" |

Request that the server begin shutting down, perhaps gracefully. (Example) |

"unload-model" |

Unload an ASR, G2P, or NLP model, reducing the Engine's memory usage. (Example) |

These are rather advanced or esoteric:

| Command | Description |

|---|---|

"align-words" |

Utility to match ASR word intervals to formatted or edited transcripts. |

"detect-speech" |

Utility to perform Voice Activity Detection for quickly segmenting audio. |

"format-text" |

Use NLP models for capitalization, punctuation, and number conversion. |

"ping" |

Respond with the string "pong", e.g. for a health check. |

"score-wer" |

Utility to evaluate ASR accuracy as Word Error Rate, without normalization. |

After the client sends its request options (with command set to "recognize", or implied as the default command) as a single-line JSON-formatted object terminated by a newline, the server's initial reply will be nearly immediate.

This might indicate a status of "failed", terminating the request before receiving audio data from the client, and could happen if:

- the request options are improperly specified, or specify an unsupported configuration;

- the number of currently processing requests is above an operator-specified limit;

- the number of currently allocated threads is above an operator-specified limit;

- the currently allocated memory is above an operator-specified limit;

- no models are currently loaded, e.g. if the Engine is starting;

- or a (graceful) shutdown has been requested.

In these cases, it may help to query the server's current state and limits with the "get-info" command.

If the server's initial response message has a status of "processing", this indicates that the client may then proceed to send audio data to the server while simultaneously receiving further response messages.

The audio data must be formatted with a WAV header or the format request option should be set to "raw":

- For WAV-formatted data, audio sample rate, encoding, and channel count will be determined from the WAV header.

- For raw data, the preceding request options must specify the sample rate (e.g.

ratefield set to8000). - For raw data, the

encodingrequest option may be specified; it defaults to little-endian 16-bit linear PCM. - For raw data, the

channelsrequest option may be specified; it must be equal to the number of channels in the audio and it defaults to 1.

The supported PCM audio encodings include:

- compressed 8-bit A-law (

"a-law") and μ-law ("mu-law"/"u-law"); - linear 16-, 24-, and 32-bit precision little-endian signed integers (

"pcm_s{16,24,32}le"/"linear{16,24,32}"); - and linear 32-bit little-endian IEEE-754 floating point (

"pcm_f32le"/"float").

For more advanced media codecs, it is suggested that the operator or client use a tool such as ffmpeg to transcode to a supported audio format, sample rate, and encoding.

After determining the audio data's rate and encoding, which may require the Engine to have received and processed some initial bytes in order to parse a WAV header, the server may soon reply with a status of "failed" if:

- the initial header bytes of WAV-formatted audio cannot be parsed correctly;

- or the audio sample rate is not supported by the specified ASR model and the

resampleoption was set tofalse.

Otherwise, the server simultaneously processes the received audio data and sends JSON-formatted response messages:

- Each message will include a

statusfield with one of the values described in the protocol overview. - The client should simultaneously read server responses while it is sending data, rather than waiting to read only after it is done sending. This could be implemented as a multi-threaded process, or using select and poll system calls.

Eventually, the client should terminate its request:

- If the audio data is formatted as WAV, then the server will attempt to read the number of bytes that are indicated in its header.

However, the WAV format was not originally designed for streaming audio, since some data length is required to be in this header.

Tools such as

soxorffmpegmight set this to a very large value when producing streaming audio; this is considered an acceptable "workaround" as the client may terminate the audio data by other means. - Irrespective of the audio format, the client can send the byte sequence

"END-OF-FILE"when it has finished sending all of the audio data. This may indicate to the Engine that it will not receive the full number of bytes that were specified in the WAV header. Any arbitrary byte sequence may be specified as theeofrequest option; it should be chosen so that it would be unlikely, if not impossible, to appear as a sequence of bytes in the audio data. - Consequently: the client doesn't need to know how much audio data it will send; this enables real-time streaming.

- If the Engine has not received the end-of-file byte sequence, nor received the number of bytes indicated in a WAV-formatted header, then it will consider the request to be timed out after 10 seconds in which no additional audio data is received.

The server will send a final message with a status of

"failed"and immediately close the connection.

After the client has terminated its request, the server should reply relatively soon after with a final message having a status of "completed" or "failed", and then close the connection.

NOTE: the Engine is designed to implement buffering of the received audio data, rather than storing all of the received audio data in memory, so the client may experience network transmission rates that appear to be throttled to match the processing speed of the Engine. The Engine is designed to perform much faster than real-time for streaming audio, or distribute batch processing across multiple threads for pre-recorded audio; so this throttling would only be experienced for pre-recorded audio sent over a very fast network to a server with limited compute capacity.

NOTE: The client should not shut down the writing side of its socket after sending the audio data. The use of such a TCP half close is not recommended as connections may be unexpectedly broken by routers or operating systems that implement aggressive timeouts. To prevent ambiguous behavior, the Engine will abort and fail the request upon receiving a TCP half-close.

For the default "recognize" command, many additional request options can be included in the first line of JSON.

These request options may specify parameters of the audio data, informing the Engine to receive it appropriately.

| Option | Type | Default | Description |

|---|---|---|---|

channels |

number | 1 | The number of channels present in the audio can be specified if the format is "raw" and cannot be specified if it is "wav". |

format |

string | "wav" |

File format of audio data, which may be "wav" or "raw". If the format is "wav", then the encoding, rate, and channels options will be inferred from the WAV file's header, along with the expected audio duration. |

encoding |

string | "pcm_s16le" |

The audio encoding must be specified if the format is "raw" and cannot be specified if it is "wav". Accepted values include "linear{16,24,32}"/ "pcm_s{16,24,32}le", "float" / "pcm_f32le", "mu-law" / "u-law", and "a-law". |

rate |

number | N/A | The audio sample rate (in Hz) must be specified if the format is "raw" and cannot be specified if it is "wav". It is recommended that this match the rate of audio used to train an ASR model. |

resample |

boolean | true |

When set to false, the Engine will not process audio with a sample rate that differs from the ASR model's rate. |

Note that WAV files are not designed for streaming use cases; however, some streaming tools may create WAV files with a bogus or overly long duration specified in the header.

In these scenarios, or when sending audio with "raw" format, the following request options may be helpful for terminating the request when a client is done sending audio data.

| Option | Type | Default | Description |

|---|---|---|---|

content-length |

number | N/A | Number of audio data bytes to read in this request, i.e. after the JSON options and newline. If the format is "wav", the audio duration specified in the WAV header will be ignored. |

eof |

string | "END-OF-FILE" |

This special byte sequence can be sent to signal that the client has finished sending data. If the format is "wav", this will take precedence over the audio duration specified in the WAV header. |

If the Engine has loaded multiple ASR models, the client can specify which is to be used in processing audio.

To obtain information about the available models, use the get-models-info command.

| Option | Type | Default | Description |

|---|---|---|---|

asr-model |

string | The name of the ASR model loaded earliest, i.e. first as listed by the get-models-info command. |

The name of the ASR model to be used when processing this request. |

The following options request additional data to be included in the recognition results:

| Option | Type | Default | Description |

|---|---|---|---|

transcript-formatted |

boolean | false |

Apply capitalization, punctuation, number-formatting, etc. |

transcript-confidence |

boolean | false |

Compute a confidence score for each recognition result. |

word-confidence |

boolean | false |

Compute a confidence score for each word in recognition results. |

word-intervals |

boolean | false |

Add word-level timestamps to recognition results. |

phone-intervals |

boolean | false |

Add phone-level timestamps to recognition results. Requires word-intervals. |

phrase-intervals |

boolean | false |

Segment transcript into likely phrases, and return the intervals. |

transcript-intervals |

boolean | false |

Add timestamps for endpointed or batch-segmented transcripts. |

The server can provide alternative hypotheses to recognition results at the word, phrase, and transcript levels.

| Option | Type | Default | Description |

|---|---|---|---|

word-alternatives |

integer | 0 |

Maximum alternative recognition hypotheses for each word in a transcript. If set to -1, there is no limit. If set to 0, disable. |

phrase-alternatives |

integer | 0 |

Maximum alternative recognition hypotheses for each phrase (multiple adjacent words) in a transcript. If set to 0, disable. |

transcript-alternatives |

integer | 0 |

Maximum alternative recognition hypotheses for each transcript in a result. If set to 0, disable. |

The Engine supports two modes for speech recognition:

- In the default mode, the Engine will perform ASR on the audio stream with a single processing thread. This mode is best suited for live audio streams that are processed in real-time; the

latency,endpoint, andpartialoptions can be used to control how results are segmented and how quickly results are sent back from the Engine. - In batch mode, the Engine will efficiently pre-process the audio stream into speech segments, and then perform ASR on these segments concurrently using multiple threads. Segments can be specified by the client in the request options or generated internally by the Engine. This mode is intended for pre-recorded audio files; however, it could also be used with real-time streams to reduce the computational load if the audio has a significant amount of silence.

The following options are only valid in default real-time mode.

| Option | Type | Default | Description |

|---|---|---|---|

endpoint |

boolean | true |

If true, the Engine determines the end of a spoken utterance based on silence and confidence criteria. If false, the audio input is considered as one utterance. |

endpoint-rules |

object | built-in | Advanced customization of endpoint rules. |

latency |

number | 0.24 |

Chunk size for ASR processing (in seconds). Low values increase CPU usage; high values degrade endpointing. |

partial |

boolean | false |

Send intermediate ASR results while an utterance has not yet reached its endpoint. This is affected by latency. |

transcript-formatted-partial |

boolean | false |

Include formatted transcripts for intermediate results, when partial and transcript-formatted are also enabled. Otherwise, formatted transcripts are only produced after endpointing an utterance. |

The following options are used to control batch mode which is enabled by setting batch-threads to a non-zero value.

| Option | Type | Default | Description |

|---|---|---|---|

batch-threads |

integer | 0 | Number of concurrent threads to use for processing this request. A setting of -1 will use as many threads as possible, subject to operator-configured limits. A setting of 1 is not parallelized but still performs speech segmentation and may speed up processing of audio with large amounts of silence. A setting of 0 disables batch processing and performs streaming recognition with endpointing. |

batch-intervals |

array of intervals | N/A | Specific intervals for the Engine to process. If set, the Engine will only process the supplied intervals. If this is not set, the Engine will perform speech segmentation of the audio, based on voice activity. This must be a sorted array of non-overlapping intervals, for example [[3.2, 10.8], [14.0, 35], [37.9, 46.1]]. Note: if batch-intervals is specified without setting batch-threads, the Engine will enable batch processing with 1 thread. |

batch-segment-min |

number | 0.0 | Minimum duration in seconds of a segment. This is applicable only when batch-intervals are not supplied. |

batch-segment-max |

number | 45.0 | Maximum duration in seconds of a segment. This is applicable only when batch-intervals are not supplied. |

The ASR results can be customized with phrase biasing to cause user-specified word sequences to become more or less likely to be recognized.

For example, {"phrase-biases":{"can do":5,"cannot":-5}} positively boosts recognition of the phrase "can do",

while also decreasing the likelihood of "cannot".

| Option | Type | Default | Description |

|---|---|---|---|

phrase-biases |

object | N/A | Mapping of phrase to positive or negative bias values. |

The Engine can be customized to perform ASR with a client-specified grammar and lexicon, e.g. for directed dialog or voice command tasks, in requests specifying the grammar and words options; see custom grammars for more details.

| Option | Type | Default | Description |

|---|---|---|---|

grammar |

object | N/A | Specify a custom grammar for this request. |

words |

array of objects | N/A | Specify a custom lexicon for this request; required with grammar. |

The following advanced options might make sense to experts, particularly if familiar with Kaldi ASR:

| Option | Type | Default | Description |

|---|---|---|---|

decode-beam |

number | 0 |

Applicable if operator enables --decoder.configurable. |

decode-lattice-beam |

number | 0 |

Applicable if operator enables --decoder.configurable. |

decode-max-active |

number | 0 |

Applicable if operator enables --decoder.configurable. |

decode-mbr |

boolean | false |

The transcript will be optimized for Minimum Bayes' Risk, but phrase and transcript alternatives may be inconsistent with this. |

decode-prune-interval |

number | 0 |

Applicable if operator enables --decoder.configurable. |

decode-prune-scale |

number | 0 |

Applicable if operator enables --decoder.configurable. |

dither |

number | 1.0 |

Used to ensure numerical stability of FFT. |

ivector-silence-weight |

number | 1.0 |

Setting this close to zero can help for ASR models that use ivectors in noisy conditions. |

lm-scale |

number | 1.0 |

The weight to give language model relative to the acoustic model. A value greater than 1.0 will cause the output to be more matched to common word usage patterns, but less matched to the speech sounds; a value between 0.0 and 1.0 will do the reverse. It can be helpful to tune lm-scale if the audio or speech quality is poor. |

resample-mode |

string | "best" |

Override with "fast"/"faster" (smaller sinc windows), or "fastest" (linear). |

seed |

number | 4499 |

Each request can have its own RNG seeded deterministically, for reproducible results. |

speed |

number | 5 |

Set the speed of the recognizer. This implements a tradeoff between speed and accuracy, with 1 being the slowest but most accurate, and 9 being the fastest and least accurate. (It sets various decoder beams that affect the lattice density.) |

transcript-alternatives-bias |

boolean | false |

In transcript-alternatives (i.e. N-best), include relative AM and LM cost differences. |

transcript-cost |

boolean | false |

Report AM and LM costs for each utterance. |

transcript-likelihood |

boolean | false |

Report AM likelihood over speech frames. |

wip |

number | 0.0 |

The word insertion penalty, an additive cost per word. Positive values cause fewer words to be recognized; negative values cause more words to be recognized. It can help to tune wip in situations where short words are over-generated or if difficult words are deleted. (cf. sip-rate) |

word-alternatives-confidence |

boolean | false |

If word-alternatives is requested, include the relative confidence of each word alternative. (i.e. MBR sausage posteriors.) |

word-alternatives-confidence-min |

number | 0.0 |

If word-alternatives is requested, include words with higher confidence than this. |

word-cost |

boolean | false |

Report AM costs over each word interval. |

word-likelihood |

boolean | false |

Word-level AM costs normalized per-frame. |

The following very advanced options are rather esoteric, and hereby documented for completeness:

| Option | Type | Default | Description |

|---|---|---|---|

cats-m |

number | 10 |

How many phrase alternatives will be ranked by cost, before selecting for arc diversity. |

cats-n |

number | 50 |

How many phrase alternatives will be considered, selecting for arc diversity. |

g2p-model |

string | (ASR-specific) | G2P model used for pronunciation generation. The default is selected to best match the ASR model's indicated language and must exactly match its set of phonemes. |

g2p-cost |

boolean | false | When generating pronunciations, add a max-normalized cost for alternative pronunciations. |

g2p-options |

object | (various) | Override the default options used for pronunciation generation. From Phonetisaurus: nbest, beam, threshold, accumulate, pmass. Additional options include: max-initialism, use-lexicon, split-words. |

nlp-model |

string | (ASR-specific) | NLP model used for transcript formatting. The default is selected to best match the ASR model's indicated language metadata. |

silence-probability |

number | 0.5 |

Probability of optional silence transitions before and after each word. |

sip-rate |

number | 0.0 |

The silence insertion penalty, specified as an additive cost per second. This can help in situations where words are deleted in favor of relatively long silences. (cf. wip) |

sip-rate-custom |

number | 0.0 |

Similar to sip-rate, but applied in decoding graph construction not lattice rescoring. |

phrase-alternatives-bias |

boolean | false |

If phrase-alternatives is requested, include relative AM and LM cost differences. |

phrase-cost |

boolean | false |

Similar to word-cost. |

phrase-likelihood |

boolean | false |

Similar to transcript-likelihood. |

transcript-intervals-decoded |

boolean | false |

If true, report end times strictly per frames that were processed by ASR, not including any extra samples that were received. |

transcript-silence |

boolean | true |

If false, filter out results if the endpointed transcript would have been the empty string (""). This is mostly cosmetic. |

word-silence-confidence-max |

number | 0.0 |

If word-confidence or word-alternatives are requested, do not include silence as a de facto "word" (represented as "") if its confidence is above this threshold. |

word-silence-duration-min |

number | 0.01 |

If word-confidence or word-alternatives are requested, do not include silence if its duration is below this threshold. |

While processing audio data, the server may respond with one or more JSON-formatted messages representing the ASR result. Some of these messages may describe the result in the context of a "segment" -- a temporal interval within the audio data. Some of the response fields are described in the table below.

| Field | Type | Description |

|---|---|---|

status |

string | Either "processing", "completed", or "failed" as in the protocol overview

|

request_id |

string | Identifier used to reference the current request; helpful for debugging against operator logs. |

final |

boolean | For the current segment, this will be false if it has not yet been endpointed. |

interval |

pair of numbers | Start and end times of current segment; seconds from the start of the audio. This is only returned if the transcript-intervals option is set to true. |

result_index |

integer | A counter used to reference the current segment; useful with partial results. |

channel |

string | The audio channel of the current segment. |

transcript |

string | The ASR output (i.e. "1-best" hypothesis). |

transcript_formatted |

string | (Optional) formatted transcript that includes punctuation, capitalization and number formatting. |

confidence |

number | (Optional) value between 0 and 1 denoting the expected accuracy. This is only returned if the transcript-confidence option is enabled. |

words |

array of objects | (Optional) more detailed information about each word in the transcript

|

words_formatted |

array of objects | (Optional) formatted words from transcript_formatted with intervals aligned from words. |

alternatives |

array of objects | (Optional) alternative recognition hypotheses for the entire segment. |

phrases |

array of objects | (Optional) more detailed information about each phrase in the segment. |

reached_exit |

boolean | (Optional) for custom grammars, if a graph exit state is reached at the final time step. |

Note that confidence, words, phrases, alternatives, and reached_exit information are only computed after segments have been endpointed (i.e. final is true).

The words list is used to present word-level information. The objects in the list can have the following fields.

| Field | Type | Description |

|---|---|---|

word |

string | Word. |

interval |

pair of numbers | Start and end times for each word (not including silences before or after). This is only returned if the word-intervals field is set to true. |

phones |

array of objects | Contains phone and interval of each phone in the word. This is only returned if word-intervals and phone-intervals are set to true. |

confidence |

number | A value between 0 and 1 (probability of this word among alternative hypotheses). This is only returned if the word-confidence field is set to true. |

alternatives |

array of objects | A ranked list of word-level alternatives. This is only returned if the word-alternatives field is greater than 0. |

The objects in the words alternatives list have the following fields.

| Field | Type | Description |

|---|---|---|

word |

string | Word. |

confidence |

number | A value between 0 and 1 (probability of this word among alternative hypotheses). |

The words_formatted list is used to present meaningful word-level interval information when both the transcript-formatted and word-intervals options are requested.

The aligned time intervals provide a correspondence between the transcript_formatted and words response fields.

The Transcript alternatives field is a ranked list of N-best alternative recognition hypotheses for the entire segment transcript.

Each object in the list has the following fields.

| Field | Type | Description |

|---|---|---|

transcript |

string | Recognition hypothesis |

bias |

object | Object containing relative costs for this alternative. This is only returned if the transcript-alternatives-bias option is true. |

The bias object has the following fields.

| Field | Type | Description |

|---|---|---|

am |

number | Acoustic model cost for this hypothesis relative to the best hypothesis. Lower is better. |

lm |

number | Language model cost for this hypothesis relative to the best hypothesis. Lower is better. |

The am and lm biases are normalized so that the best-scoring alternative has am and lm biases of 0.

The bias-words command can be used to modify the bias scores of words in an ASR model's vocabulary.

This can change the ordering of alternatives, and may change the 1-best transcript.

For more insight, see the bias-words docs.

The phrases list presents information about the phrases in the segment. The objects in the list are returned

in sequential order and can have the following fields.

| Field | Type | Description |

|---|---|---|

phrase |

string | The recognition hypothesis for this phrase. |

interval |

pair of numbers | Start and end time for the phrase interval. This is only returned if the phrase-intervals field is set to true. |

alternatives |

array of objects | A ranked list of alternative recognition hypotheses for the phrase. This is only returned if the phrase-alternatives field is greater than 0. |

The output of the phrase alternatives list is very similar to the format of the transcript alternatives.

Each of the objects in the phrase alternatives list has these fields.

| Field | Type | Description |

|---|---|---|

phrase |

string | The phrase hypothesis |

bias |

object | Object containing relative costs for this alternative. This is only returned if the phrase-alternatives-bias option is true. |

The bias object has the same fields as in the transcript alternatives list.

The examples below use several command-line tools:

-

curl: an HTTP client, useful for downloading example files. -

nc: a.k.a.netcat, a utility that can create TCP connections and interact via stdin/stdout. -

jq: a JSON parser, useful for filtering and formatting server responses. -

sox: a multi-platform general purpose command line tool for processing audio.

These are generally available for installation via package managers on most Linux systems.

curl -sLO mod9.io/hi.wav

(echo '{"command":"recognize","transcript-formatted":true}'; cat hi.wav) | nc $HOST $PORTThis example downloads a short 8kHz audio file, and passes it directly to the server -- after first writing the request options as a single-line JSON object.

The variables $HOST and $PORT should be set to point to the hostname and port the server is running on.

If the server's default ASR model isn't 8kHz, the server will resample the audio to match sampling rate of the default model.

The results are printed as follows:

{"request_id":"1234","status":"processing"}

{"final":true,"result_index":0,"status":"processing","transcript":"hi can you hear me","transcript_formatted":"Hi. Can you hear me?"}

{"status":"completed"}In the variant below, note that the default command does not need to be specified.

(echo '{"transcript_formatted":true,"word-intervals": true}'; cat hi.wav) | nc $HOST $PORT \

| jq -r 'select(.final)|.words_formatted[]|"\(.interval[0]): \(.word)"'The response now includes time intervals for each word.

The jq tool parses the start times and "formatted" (i.e. capitalized, punctuated) display for each word.

0.09: Hi.

0.33: Can

0.51: you

0.57: hear

0.69: me?

This example operates in a very similar manner to the previous example, except that it records audio from your default microphone or audio source rather than from a file.

Since the default recording settings (e.g. the sampling rate) may not be compatible with the server, we use sox to convert the audio.

This example also demonstrates passing raw PCM (with an explicit sample rate), receiving output for partial segments, and word-level confidences with the final transcript.

(echo '{"format": "raw", "rate": 8000, "encoding": "linear16", "word-confidence": true, "partial": true}'; \

sox -q -d -V1 -t raw -r 8000 -b 16 -e signed -c 1 - ) | nc $HOST $PORTThe output comprises several lines of intermediate results before a final result is returned with confidences:

{"request_id":"1234","status":"processing"}

{"final":false,"result_index":0,"status":"processing","transcript":"test"}

{"final":false,"result_index":0,"status":"processing","transcript":"testing"}

{"final":false,"result_index":0,"status":"processing","transcript":"testing one"}

{"final":false,"result_index":0,"status":"processing","transcript":"testing one too"}

{"final":false,"result_index":0,"status":"processing","transcript":"testing one two three"}

{"final":false,"result_index":0,"status":"processing","transcript":"testing one two three four"}

{"final":true,"result_index":0,"status":"processing","transcript":"testing one two three four","words":[{"confidence":1.0,"word":"testing"},{"confidence":0.9986,"word":"one"},{"confidence":0.993,"word":"two"},{"confidence":1.0,"word":"three"},{"confidence":0.9983,"word":"four"}]}

...We can also use WAV format, in which case we can omit the format and rate options:

(echo '{"partial": true, "word-confidence": true}'; \

sox -q -d -V1 -t wav -r 8000 -b 16 -e signed -c 1 - ) | nc $HOST $PORTcurl -sLO mod9.io/SW_4824_B.wav

(echo '{"batch-threads":5}'; cat SW_4824_B.wav) | nc $HOST $PORTThis example downloads a 5-minute 8kHz audio file and passes it to the server for processing in batch mode with 5 threads. The audio will be segmented and processed by 5 concurrent threads. The results will be output in order as they become ready. The results are printed to stdout as follows:

{"request_id":"1234","status":"processing"}

{"final":true,"result_index":0,"status":"processing","transcript":"uh i bought a ninety two honda civic i was looking more for a smaller type car"}

{"final":true,"result_index":1,"status":"processing","transcript":"ah so it was between the the honda civic into saturn's"}

{"final":true,"result_index":2,"status":"processing","transcript":"really uh-huh"}

...The server can produce formatted transcripts with capitalization and punctuation.

The following command extends the previous streaming audio example by setting transcript-formatted:

(echo '{"transcript-formatted": true}'; \

sox -q -d -V1 -t wav -r 8000 -b 16 -e signed -c 1 - ) | nc $HOST $PORT | \

jq -r 'select(.final)|"\(.transcript) --- \(.transcript_formatted)"'we haven't talked in a while how are you doing --- We haven't talked in a while. How are you doing?

pretty good --- Pretty good.

uh [laughter] --- Uh

yeah exactly --- Yeah, exactly.

The following example requests the Engine to respond with up to 3 hypotheses for each word in the recognition, and include a confidence score for each alternative.

curl -sLO mod9.io/SW_4824_B.wav

(echo '{"word-alternatives": 3, "word-alternatives-confidence":true}'; cat SW_4824_B.wav) | nc $HOST $PORT | jq .The results for each segment are returned in the same JSON object. The word hypotheses are returned with the "words"

key as a list of JSON objects, one for each word.

...

{

"final": true,

"result_index": 0,

"status": "processing",

"transcript": "so what did you buy a i bought a ninety two honda civic i was looking more for a smaller type car",

"words": [

{

"alternatives": [

{

"confidence": 0.9945,

"word": "so"

},

{

"confidence": 0.003,

"word": "[laughter]"

},

{

"confidence": 0.0014,

"word": "[noise]"

}

],

"word": "so"

},

...

]

}

...The phrase alternative algorithm splits each segment into consecutive, disjoint phrases.

The following example requests the Engine to respond with up to 3 hypotheses for each phrase,

and to return the bias scores for each hypothesis.

curl -sLO mod9.io/SW_4824_B.wav

(echo '{"phrase-alternatives": 3, "phrase-alternatives-bias":true}'; cat SW_4824_B.wav) | nc $HOST $PORT | jq .The results for each segment are returned in the same JSON object, as a list of objects under

the "phrases" key. Each result object contains the phrase and a list of "alternatives".

...

{

"final": true,

"phrases": [

{

"alternatives": [

{

"bias": {

"am": 0,

"lm": 0

},

"phrase": "so"

},

{

"bias": {

"am": 10.828,

"lm": -1.791

},

"phrase": ""

},

{

"bias": {

"am": -4.232,

"lm": 13.393

},

"phrase": "sew"

}

],

"phrase": "so"

},

{

"alternatives": [

{

"bias": {

"am": 0,

"lm": 0

},

"phrase": "what did you"

},

{

"bias": {

"am": 0,

"lm": 5.089

},

"phrase": "what did u"

},

{

"bias": {

"am": 5.188,

"lm": 0.295

},

"phrase": "what would you"

}

],

"phrase": "what did you"

},

...

]

}

...The following example requests the Engine to respond with up to 3 hypotheses for each transcript.

curl -sLO mod9.io/SW_4824_B.wav

(echo '{"transcript-alternatives":3}'; cat SW_4824_B.wav) | nc $HOST $PORT | jq .The alternatives are returned as a list of objects under the "alternatives" key.

...

{

"alternatives": [

{

"transcript": "so what did you buy a i bought a ninety two honda civic i was looking more for a smaller type car"

},

{

"transcript": "so what'd you buy a i bought a ninety two honda civic i was looking more for a smaller type car"

},

{

"transcript": "so what did you buy uh i bought a ninety two honda civic i was looking more for a smaller type car"

}

],

"final": true,

"result_index": 0,

"status": "processing",

"transcript": "so what did you buy a i bought a ninety two honda civic i was looking more for a smaller type car"

}

...This command requests that the server display information about loaded models:

nc $HOST $PORT <<< '{"command": "get-models-info"}' | jq{

"asr_models": [

{

...

"name": "en-US_phone",

"rate": 8000,

...

},

{

...

"name": "en_video",

"rate": 16000,

...

}

],

...

"status": "completed"

}The Engine has loaded an ASR model named "en-US_phone" that accepts 8kHz audio, as well as an ASR model named "en_video" that accepts 16kHz audio.

The first model in the asr_models list is the "default" model to be used if a asr-model field isn't specified in a recognition request.

In this case, it's the "en-US_phone" model.

To specify that a request use the "en_video" model:

curl -sLO mod9.io/greetings.wav

(echo '{"asr-model":"en_video"}'; cat greetings.wav) | nc $HOST $PORTAll else being equal (e.g. noise levels, accents), it's best to use an

ASR model whose sampling rate is as close as possible to the native

(original) sampling rate of the audio. By default, the Engine will

resample the audio to match the ASR model's sampling rate using an

algorithm that is on the faster side of the possible speed/accuracy

trade offs. You can modify these defaults by setting the request

options resample and resample-mode as described above.

To load a model into the Engine, use the command load-model with

the name of the model to be loaded in the "asr-model", "g2p-model", or "nlp-model" options.

To unload a model, use the command unload-model with the name of the

model to be unloaded in the "asr-model", "g2p-model", or "nlp-model" option.

NOTE: loading and unloading models is disabled if the Engine was not started with the --models.mutable option.

Examples:

nc $HOST $PORT <<< '{"command": "load-model", "asr-model": "mod9/en-US_phone-smaller"}'

nc $HOST $PORT <<< '{"command": "unload-model", "asr-model": "en_video"}'Note that the default ASR model to be used in a recognition request is the one that is loaded earliest. If you unload the default ASR model, a remaining ASR model that was loaded the earliest becomes the new default.

nc $HOST $PORT <<< '{"command": "lookup-word", "word": "euphoria", "asr-model":"en_video"}' | jqThe server will respond with a message indicating whether the requested word is in the model's vocabulary.

{

"bias": 0,

"found": true,

"asr_model": "en_video",

"status": "completed",

"word": "euphoria"

}If the asr-model field is omitted, the Engine will check the first model in the "asr_models" list from the response to the "get-models-info" command.

nc $HOST $PORT <<< '{"command": "get-info"}' | jqThis command requests that the server display information about its current usage statistics and configuration settings:

{

"build": "cb1e7aa.211215.8817678db22f.centos7.gcc9.jemalloc5.mkl",

"cpu_percent": {

"available": 6391,

"limit": 6400,

"used": 0

},

"hostname": "mod9.io",

"license_type": "custom",

"limit": {

"read_kibibytes": {

"line": 1024,

"stream": 16,

"wav_header": 1024

},

"read_timeout": {

"line": 60,

"stream": 10

},

"throttle_factor": 2,

"throttle_threads": 4

},

"memory_gibibytes": {

"available": 33,

"headroom": 10,

"limit": 64,

"peak": 31,

"used": 31

},

"mkl": {

"cache_gib": {

"max": 1,

"used": 0,

},

"data_type": "f32",

"range_scale": 0.5,

"reproducibility": false,

},

"models": {

"indexed": false,

"loaded": {

"asr": 26,

"g2p": 1,

"nlp": 2

},

"mutable": false

},

"requests": {

"active": 0,

"failed": 0,

"limit": -1,

"mutable": {

"completed": 0,

"limit": -1

},

"received": 2

},

"shutdown": {

"allowed": false

},

"state": "ready",

"status": "completed",

"threads": {

"active": 0,

"allocated": 0,

"limit": -1,

"limit_per_request": 64

},

"uptime_seconds": 83,

"version": "2.0.0"

}Some of the information reported by this command is only useful to a relatively advanced client, perhaps someone with an understanding of the operator's perspective and settings.

Note that the "active" threads represent those that are currently in the relatively compute-intensive processing stage of ASR decoding.

This count does not include threads that might be in other compute-intensive processing stages (e.g. transcript formatting or model loading).

It does not count the relatively lightweight commands or pre-processing stages such as audio resampling or voice activity detection.

Also, an "active" thread might not represent 100% CPU utilization, e.g. if it is in online (not batch) mode and awaiting real-time audio input.

Note also that the "allocated" threads count includes those which are "active",

as well as threads that could be "active" but might be otherwise waiting in a thread pool.

nc $HOST $PORT <<< '{"command": "shutdown", "timeout": 30.5}' | jqThe Engine will stop accepting new requests, and will wait up to 30.5 seconds for all active requests to finish. If there are still active requests after 30.5 seconds, the Engine will forcefully exit with a nonzero exit code.

If timeout is set to a negative value, the Engine will attempt to gracefully shutdown, waiting indefinitely for active requests to finish.

The Engine will immediately respond with a message acknowledging the request, but will not close the connection with the client until it has shut down.

If there are multiple shutdown requests, the Engine will exit as soon as any one of them reaches its timeout.

Here is a curated summary of significant changes that are relevant to ASR Engine clients (cf. operator release notes):

-

2.0.0 (2025 Oct ??):

- TODO: E2E model usage documentation.

-

1.9.9 (2025 Sep 25):

- Add

silence-probabilityrequest option, affecting whether silence appears. - Add

sip-rate-customrequest option; likesip-rate, but much more efficient. - Both apply to custom lexicons or grammars, or the

add-{grammar,words}commands. - Add

strictsub-option tog2p-options, to error on unrecognized characters.

- Add

-

1.9.8 (2025 Jul 15):

- Add

phones-intervalrequest option. - Minor changes to handling of

g2p-options.

- Add

-

1.9.7 (2025 May 27):

- Improved experimental release of Whisper model functionality; ask Mod9 for testing support.

- Add

reached_exitresponse field, applicable to graph decoding with custom grammars. - Add

decode-{beam,lattice-beam,max-active,prune-interval,prune-scale}options. - Fix custom lexicons to allow more disambiguation of pronunciations.

-

1.9.6 (2024 Apr 30):

- Undocumented experimental release of Whisper model functionality; ask Mod9 for testing support.

-

1.9.5 (2024 Mar 22):

- Various upgrades and improvements, should not affect functionality w.r.t clients.

-

1.9.4 (2023 May 13):

- Add German to ASR models from Mod9:

- Similar to TUDA but more robust to noise and acoustic conditions.

- Packaged with a compatible G2P model to enable customization.

- Add various ASR models from Vosk:

- Upgraded: Chinese, Esperanto, Italian, Russian, Spanish, and Vietnamese.

- Add larger models for Italian, Japanese, Spanish, and Vietnamese.

- Add alternative models for English (Gigaspeech) and Portuguese (FalaBrasil).

- New languages: Arabic, Polish, Uzbek, and Korean.

- Add German to ASR models from Mod9:

-

1.9.3 (2022 May 03):

- Add various ASR models from Vosk:

- Upgraded: Chinese, Dutch, French, Indian-accented English, and Spanish.

- New languages: Czech, Esperanto, Hindi, and Japanese.

- Add German ASR models from TUDA that improve accuracy and enable customization.

- Add various ASR models from Vosk:

-

1.9.2 (2022 Apr 25):

- Default values for

g2p-optionsare now correctly applied for thepronounce-wordscommand.

- Default values for

-

1.9.1 (2022 Mar 28):

- Fix regression bug introduced in version 1.7.0, related to handling of WAV files.

-

1.9.0 (2022 Mar 21):

- Support multi-channel audio streams.

- The number of channels will be parsed from a WAV-formatted header.

- Raw-formatted audio should specify the

channelsrequest option. - Engine replies may include a

"channel"field that is a 1-indexed channel number (as string type). - Online-mode recognition will allocate one processing thread per channel, subject to operator limits.

- Batch-mode recognition with

batch-intervalsmay be specified as JSON object:- Each key is a string-typed 1-indexed channel number.

- Each value is an array of 2-element arrays (i.e.

batch-intervalsformat for single-channel audio).

- The

get-infocommand now responds nearly instantly, with CPU utilization cached just prior to the request. - Fix a bug that could result in incorrectly counting the number of allocated threads.

- Support multi-channel audio streams.

-

1.8.1 (2022 Feb 18):

- Minor fix to improve the stability of results if the Engine operator enabled non-default optimizations.

-

1.8.0 (2022 Feb 16):

- New commands:

drop-grammaranddrop-words. - Add

"mkl"information to the reply of theget-infocommand:- If

mkl.data_typeis set to"int16", clients may notice overall speed improvement.

Results should be expected to differ insignificantly and non-deterministically. - If

mkl.reproducibilityis enabled, (andmkl.data_typeis"f32"), results should be reproducible.

However, the overall speed and throughput of the Engine may be degraded under this condition.

- If

- Fix a regression bug introduced in version 1.7.0: raw audio

encodingshould default topcm_s16le.

- New commands:

-

1.7.0 (2022 Feb 02):

- Add support for 24- and 32-bit linear little-endian PCM audio encoding, as well as 32-bit little-endian float.

-

1.6.1 (2022 Jan 06):

- The

transcript-alternativesoption is now limited to a maximum of 1,000; higher values are impractical. Consider using thephrase-alternativesrepresentation, which is better in nearly all regards. - Requests that involve G2P processing now have the applicable

"g2p_options"included in replies. - The

"context_seconds"reported byget-models-infowas incorrect (by a frame subsampling factor of 3).

- The

-

1.6.0 (2021 Dec 16):

- New functionality to automatically generate pronunciations:

- The

add-wordscommand and custom grammar functionality no longer require"phones"to be specified. For words that may have unusual pronunciations, a"soundslike"hint can also be specified. - This functionality is currently only supported for English ASR models from Mod9.

Note that these ASR models from Mod9 now require their phones to be specified as uppercase.

- The

- Add

g2p-modelrequest option, reflected as"g2p_model"in replies. - Add

g2p-costandg2p-optionsrequest options, which are highly advanced and esoteric. - New command:

pronounce-words, useful to audit pronunciations before automatically adding them. - The reply of the

get-infocommand has slightly different fields, including a count ofrequests.mutable. - Fix a minor bug: all replies now consistently report

"asr_model"and never"asr-model". - Fix a rare non-deterministic bug: sample rate conversion could drop samples at end of audio stream.

- New functionality to automatically generate pronunciations:

-

1.5.0 (2021 Nov 30):

- The

get-infocommand now replies withthreads.active, and the correct value forthreads.allocated. - Requests with

batch-threadsspecified may experience throttling when server is overloaded.

- The

-

1.4.0 (2021 Nov 04):

- Changes to the

resample-moderequest option:- The default value is now

"best"; it is faster and more accurate than the prior default. - The prior

"better"value is now implemented as"fast". - The prior

"fast"and"faster"values are now called"faster"and"fastest", respectively.

- The default value is now

- Speed improvement for some requests that generate alternatives or confidence scores.

- Changes to the

-

1.3.0 (2021 Oct 27):

- Improve NLP models, particularly with respect to punctuation and capitalization.

-

1.2.0 (2021 Oct 11):

- New request option for

recognizecommand:phrase-biases.- Phrases that should be recognized more accurately can be "boosted" with a positive bias value.

- Phrases that appear incorrectly can be "anti-biased" with a negative bias value (unlike Google STT).

- This is generally better than the

bias-wordscommand, and is enabled even if--models.mutable=false.

- New fields reported by

get-infocommand:-

"memory_gibibytes"now includes"peak"memory usage, as well as operator-configured"headroom". -

"limit"reports operator-configured limits on buffers sizes and timeouts applied when reading requests.

-

- New fields reported by

get-models-infocommand:-

"memory_gibibytes"reports the approximate memory usage of a model after it is loaded. -

"size"no longer reports the file sizes of model components; it now reports the number of parameters and input context size of acoustic models, as well as the number of arcs and states in the decoding graph.

-

- New command:

add-grammar(currently in experimental preview) - Minor feature improvements and bugs fixed:

- Improve capitalization of words that don't start with letters (with

transcript-formattedoption). - Acoustic models that use i-vectors could fail for certain

latencyvalues or batch-mode processing. - The

add-wordscommand could cause theword-alternativesoption to generate incorrect results.

- Improve capitalization of words that don't start with letters (with

- New request option for

-

1.1.0 (2021 Aug 11):

- Reconfigure ASR settings to mitigate worst-case memory usage. Transcripts may differ from prior versions.

-

get-versioncommand now replies with the"build"field as a string rather than a JSON object. -

get-infocommand now replies with separate counts of loaded ASR and NLP models.

-

1.0.1 (2021 Jul 31):

- Fix bug in which maximum number of concurrent requests was limited.

-

1.0.0 (2021 Jul 15):

- This first major version release represents the functionality and interface intended for long-term compatibility.

- Deprecate the

modelrequest option, which is nowasr-model; replies will reflect an"asr_model"field. - Add

nlp-modelrequest option, reflected as"nlp_model"in replies. - The

add-words,bias-words, andget-models-infocommands now reply with"added"and"biased"fields.

-

0.9.1 (2021 May 20):

- Minor bugfixes and improvements.

-

0.9.0 (2021 May 05):

- Automatic sample rate conversion, along with

resampleandresample-modeoptions. - Advanced option for

ivector-silence-weightif loading models with i-vectors. - Batch-mode processing no longer supports

latencyoption and is significantly faster. - Strict sub-option validation: e.g.

word-alternatives-confidencecannot betrueifword-alternativesis 0. - Bugfix:

phrase-alternativeshad been inconsistently sorted.

- Automatic sample rate conversion, along with

-

0.8.1 (2021 April 06):

- Improve acoustic model setting for 16k models.

- Improve number formatting for English models.

- The

get-infocommand reports number of add-words requests and server uptime in seconds. - Accept WAV files that report improper block alignment.

-

0.8.0 (2021 March 16):

- Major backwards-incompatible changes:

- The

transcript-intervalsoption now defaults tofalse. - The

word-alternatives-confidenceoption now defaults tofalse. - Relative AM/LM costs of transcript and phrase alternatives are nested under a

biasobject. - Add

transcript-alternatives-biasandphrase-alternatives-biasoptions, defaulting tofalse. - Rename

lexiconoption towords. -

get-statscommand renamed toget-info. -

shutdown,bias-words, andadd-wordscommands are operator-configurable, and disallowed by default.

- The

- Major backwards-incompatible changes:

-

0.7.1 (2021 February 19):

- Change default limit on threads per request to the number of CPUs available. This is operator-configurable.

-

0.7.0 (2021 February 16):

- New commands:

add-words,bias-words,lookup-word. - New request options:

content-length,transcript-confidence,transcript-intervals, and various advanced options. - Deprecate

confidenceandtimestampoptions (replaced byword-confidenceandword-intervals). - Add

word-alternatives-confidenceand renamedword-alternatives-confidence-min. - Rename

word-silence-confidence-maxandword-silence-duration-min. - Add

timeoutoption toshutdowncommand and deprecatedkillcommand. - Minor bugfixes and improvements, including memory management.

- New commands:

-

0.6.0 (2020 December 11):

- Simplified request options:

- Remove

batchand usebatch-threadsto enable multi-threaded batch processing mode. - Rename

*-alternatives-maxto*-alternatives, inferring state from the specified limit. - Note that

0means disabled, non-zero mean enabled, and-1means unlimited. - For example:

- Previously, might specify

"word-alternatives": true, "word-alternatives-max": 3 - Now, same behavior specified by

"word-alternatives": 3

- Previously, might specify

- Remove

- Support for multiple models:

- The client can view the currently loaded models using the new

get-models-infocommand. - The

modelrequest option may be specified with the name of a currently loaded model.

- The client can view the currently loaded models using the new

- New response field for

words_formatted:- Enabled when

transcript-formattedis requested withword-intervals. - Provides timestamps that enable audio playback even for words formatted as numbers or with punctuation.

- Enabled when

- Update application protocol:

- If a response message has status

"failed", it may include an explanation in theerrorfield. - This was previously reported in a field named

message.

- If a response message has status

- Simplified request options:

-

0.5.0 (2020 October 30):

- Improve transcript formatting of years and North American telephone numbers.

- Initial release of Python SDK and REST wrappers.

- Update application protocol: if the client doesn't send anything for 10s, this is considered an error.

-

0.4.2 (2020 October 8):

- Deprecate MKL thread-level parallelism, which can be impractical and confusing.

(In general, batch-mode distribution across threads is a better approach.)

- Deprecate MKL thread-level parallelism, which can be impractical and confusing.

-

0.4.1 (2020 September 25):

- New feature: Custom Grammars and Lexicons

-

0.4.0 (2020 August 17):

- New feature: recognition alternative hypotheses can be returned.

-

transcript-alternatives: traditional "N-best lists" at the segment-level -

word-alternatives: one-to-one word-level lists ranked by confidence -

phrase-alternatives: patent-pending representation with optimal tradeoffs

-

- Minor bugfixes and improvements.

- New feature: recognition alternative hypotheses can be returned.

-

0.3.1 (2020 June 30):

- Add support for A-law and μ-law WAVE formats.

- Add

batch-segment-minandbatch-segment-maxoptions. - Better disfluency removal in transcript formatting.

-

0.3.0 (2020 May 13):

- New feature: recognition can now be parallelized (batch mode).

- Rename default speech recognition command from

"decode"to"recognize". - Remove

segment-interval-maxandsegment-interval-minoptions. - Minor bugfixes and improvements.

-

0.2.5 (2020 Mar 10):

- Server is responsive during startup.

- Minor bugfixes and improvements.

-

0.2.4 (2020 Feb 20):

- New feature: transcript formatting.

- Improve handling of WAV format.

-

0.2.2 (2020 Jan 24):

- Minor bugfixes and improvements.

-

0.2.1 (2019 Nov 15):

- Improve recognition of words near segment endpoints.

- Rename

endpoint-maxtosegment-interval-maxand addedsegment-interval-min.

-

0.2.0 (2019 Sep 23):

- Initial release, privately shared with partners.

©2019-2025 Mod9 Technologies (Version 2.0.0)